5th-year Ph.D. Candidate

Department of Computer Science, Florida State University

Hansong Zhou is a fifth-year Ph.D. candidate in the Department of Computer Science at Florida State University, under the supervision of Prof. Xiaonan Zhang. He received his master's degree in 2021 from the Department of Electrical & Computer Engineering at the University of Florida, and his bachelor's degree in 2019 from the Department of Electrical and Information Engineering at Xi'an Jiaotong University. His work contributes to efficient and reliable edge intelligence, particularly rapid deployment in large-scale scenarios and Large Language Model (LLM) inference acceleration.

Hansong's research focuses on distributed LLM training and inference at the edge, LLM fine-tuning for domain-specific expert agents, and large-scale collaborative edge systems. He currently owns the end-to-end delivery of an LLM-based AI Health Coach for rural health, spanning data pipeline design, model fine-tuning, prompt engineering, and final application deployment.


Education


Florida State University, Department of Computer Science


Ph.D. Candidate, Aug. 2021 - present

University of Florida, Department of Electrical & Computer Engineering


M.S. in Computer Engineering, Sep. 2019 - Jul. 2021

Xi'an Jiaotong University, Department of Electrical and Information Engineering


B.S. in Information Engineering, Sep. 2015 - Jul. 2019

Experience


University of Electronic Science and Technology of China, School of Information and Communication Engineering


Research Intern, Jul. 2018 - Dec. 2018

Honors & Awards


Dean's Award for Doctoral Excellence (DADE), Florida State University, 2023 - 2025

Selected Publications

Fairness-Oracular MARL with Competitor-Aware Signals for Collaborative Inference

Hansong Zhou, Xiaonan Zhang

NeurIPS '25 - AI4NextG: The Thirty-Ninth Annual Conference on Neural Information Processing Systems

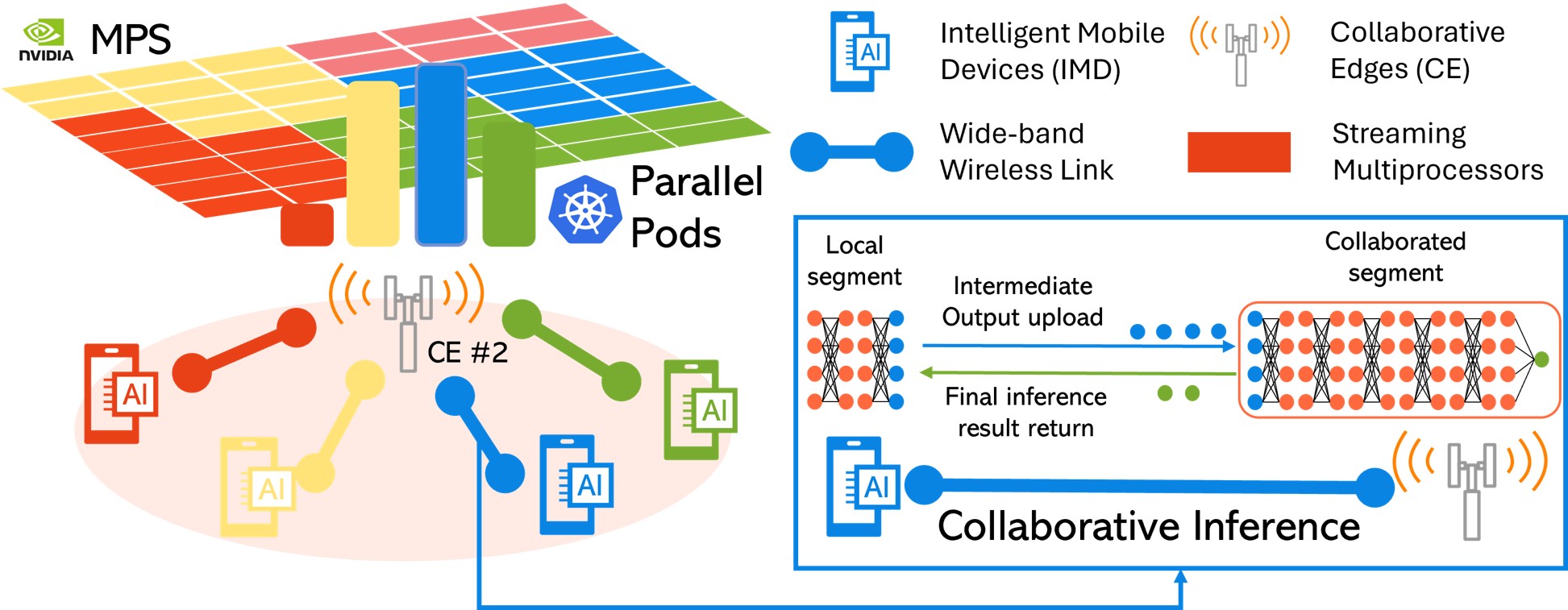

Collaborative inference (CI) in NextG networks enables battery-powered devices to collaborate with nearby edges on deep learning inference. The fairness issue in a multi-device multi-edge (M2M) CI system remains underexplored. Mean-field multi-agent reinforcement learning (MFRL) is a promising solution for its low complexity and adaptability to system dynamics. However, the…


Similarity-Guided Rapid Deployment of Federated Intelligence Over Heterogeneous Edge Computing

Hansong Zhou, Jingjing Fu, Yukun Yuan, Linke Guo, Xiaonan Zhang

INFOCOM '25: IEEE Conference on Computer Communications

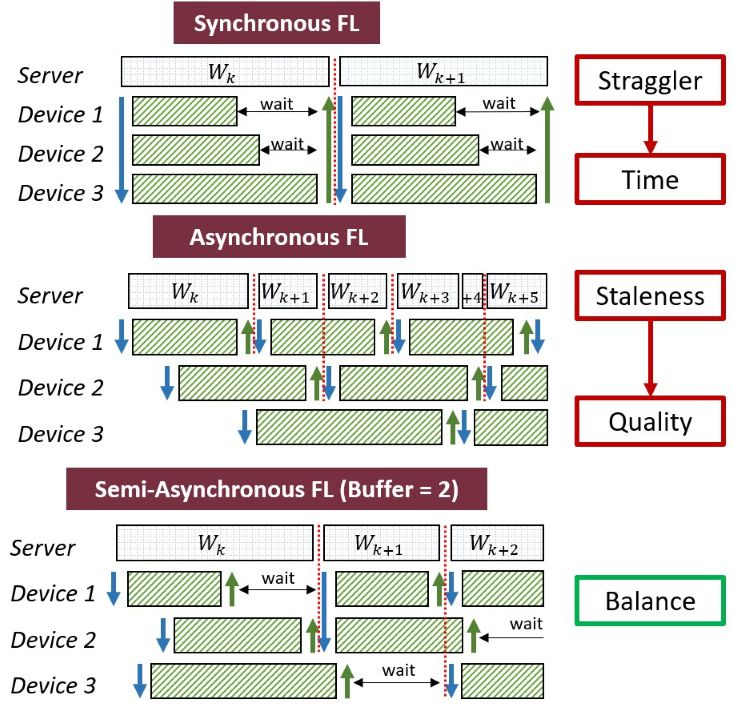

Edge computing is envisioned to enable rapid federated intelligence on edge devices to satisfy their dynamically changing AI service demands. Semi-Asynchronous FL (Semi-Async FL) enables distributed learning in an asynchronous manner, where the server does not have to wait for all local models to improve the global model. Hence, it takes…


Waste not, want not: service migration-assisted federated intelligence for multi-modality mobile edge computing

Hansong Zhou, Shaoying Wang, Chutian Jiang, Linke Guo, Yukun Yuan, Xiaonan Zhang

MobiHoc '23: Proceedings of the Twenty-fourth International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing

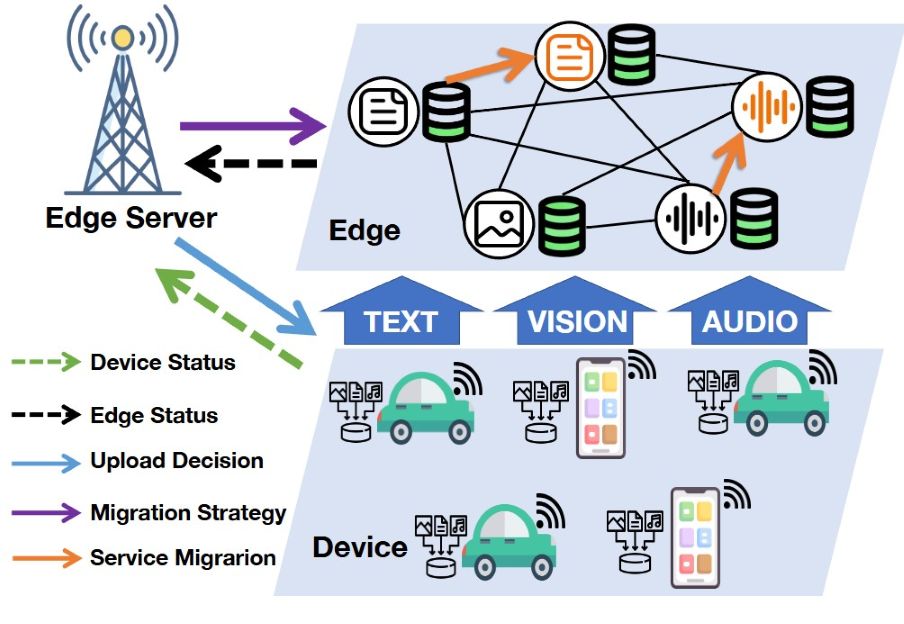

Future mobile edge computing (MEC) is envisioned to provide federated intelligence to delay-sensitive learning tasks with multimodal data. Conventional horizontal federated learning (FL) suffers from high resource demand in response to complicated multi-modal models. Multi-modal FL (MFL), on the other hand, offers a more efficient approach for learning from multi-modal…


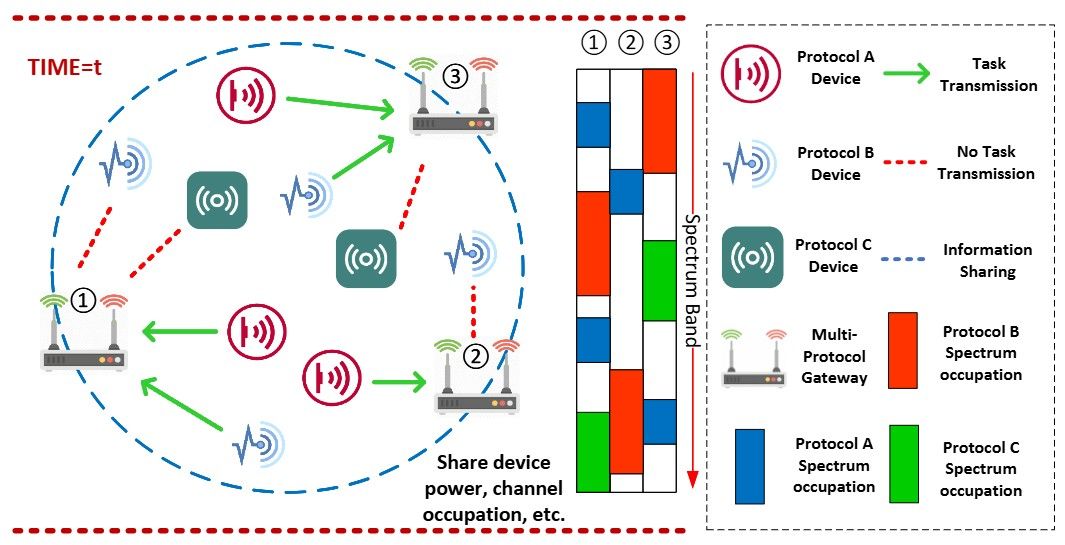

DQN-based QoE Enhancement for Data Collection in Heterogeneous IoT Network

Hansong Zhou, Sihan Yu, Linke Guo, Beatriz Lorenzo, Xiaonan Zhang

MASS '22: IEEE 19th International Conference on Mobile Ad Hoc and Smart Systems

Sensing data collection from the Internet of Things (IoT) devices lays the foundation to support massive IoT applications, such as patient monitoring in smart health and intelligent control in smart manufacturing. Unfortunately, the heterogeneity of IoT devices and dynamic environments result in not only the life-cycle latency but also data…
